How to Copy Large Files over VPN or Other Unreliable Network Connections

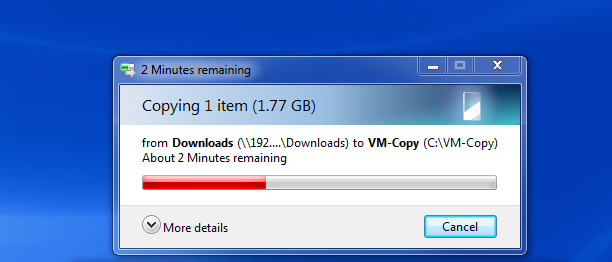

Transferring large files over a VPN is a problem for many companies for a few reasons: the transfer is unreliable, the VPN traffic saturates the Internet connection, and the whole process is unproductive. This article shows how to copy large files over a VPN or other unreliable network connections, and covers some of the best software to deal with this, …