Large file transfers over VPN are a problem for many companies for a few reasons: the transfer is unreliable, the VPN traffic saturates the Internet connection, and the time lost to failed transfers is unproductive. This article shows how to copy large files over VPN or other unreliable network connections, some of the best software for the job, best practices for large file transfers, and how to ensure file integrity. These are, in my experience, the best ways to do it. You have to evaluate for yourself whether they work in your environment, and test a lot.

When copying files over VPN, there are a few problems that need to be addressed:

- the file transfer can easily be interrupted,

- the transfer can oversaturate the VPN connection,

- all the available bandwidth can end up redirected to the VPN connection,

- the transferred file must arrive undamaged.

Let’s talk a bit about each of these, why they are important, and how they affect the success of your file copy. This will hopefully give you a clearer picture of the process.

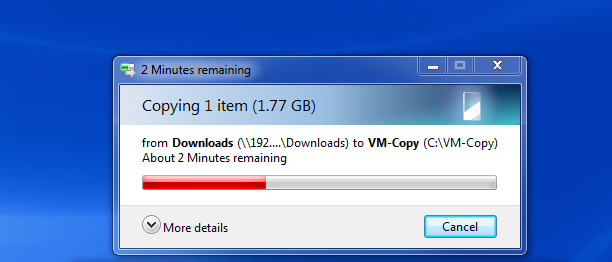

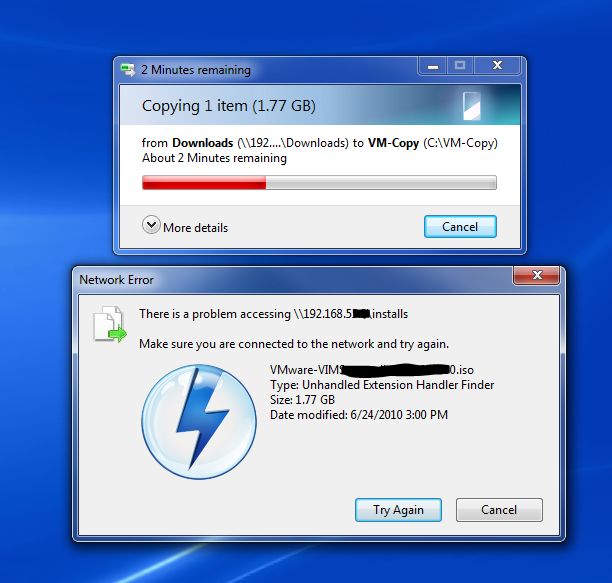

Network Interruption – File Transfer Failed

With large file transfers, an interruption after a few hours is costly: all the time already spent is lost, and you have to start over because the transfer failed. There are many reasons for a network interruption, and even a one-second drop is enough to corrupt your file. VPN connections are prone to interruptions during large transfers, because the transfer saturates the Internet bandwidth, and when other VPN clients try to use the tunnel, the file transfer can be interrupted.

The fix for this is transfer resuming, supported at both the server level and the client level. A few client-server protocols that support resuming are SMB, FTP, HTTP, and rsync.

The easiest way to implement file transfer resuming in a corporate environment is through SMB and robocopy. Robocopy can copy in restartable network mode, so if the network goes down, it will automatically resume the transfer from where it left off. The robocopy option for restartable mode is /z, the same switch used by the regular copy command.

robocopy X:\source-folder\ \\RemoteServer\RemoteFolder /mir /z

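Rsync also has a resume option, and it works great, but make sure you point rsync at the remote host over the network (user@server:/path) and not at a locally mounted network directory. The -P flag combines --partial, which keeps partially transferred files so that a rerun can pick up from them, with --progress. The transfer command looks like this: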

rsync -aP juser@server:/RemoteServer/Directory /Home/Local-Directory
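Resuming only helps if something restarts the transfer after the connection drops. As a minimal sketch, the helper below simply reruns a command until it succeeds or a retry limit is reached. The function name `retry_transfer` and its arguments are hypothetical, chosen for illustration, and it assumes a POSIX-style shell such as bash.

```shell
# retry_transfer MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Hypothetical helper: reruns COMMAND until it exits with status 0,
# or gives up once MAX_ATTEMPTS attempts have failed.
retry_transfer() {
    local max_attempts=$1 delay=$2
    shift 2
    local attempt=1
    until "$@"; do
        if [ "$attempt" -ge "$max_attempts" ]; then
            echo "giving up after $attempt attempts" >&2
            return 1
        fi
        echo "attempt $attempt failed; retrying in ${delay}s" >&2
        attempt=$((attempt + 1))
        sleep "$delay"
    done
}
```

Used with the rsync command above, it would be called as `retry_transfer 20 60 rsync -aP juser@server:/RemoteServer/Directory /Home/Local-Directory`. Because -P keeps partial files, each retry can continue from the data already received instead of starting from zero. The values 20 and 60 are placeholders, not recommendations.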

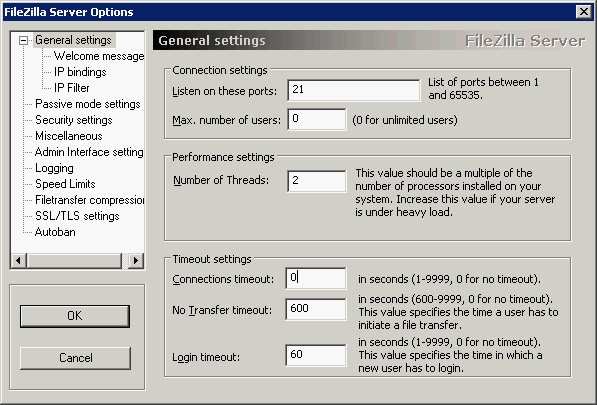

FileZilla has an option to resume files after an interruption, but a timeout is set by default. Set the timeout to 0 so that you can recover even after a few hours with no connection between server and client. Note that this is not a good option if you have many clients, since it keeps connections open indefinitely.

Apache also allows resuming downloads, and this is enabled by default. The disadvantage of Apache is that it doesn’t support file upload by default. If you are determined to use Apache for this, there are ways to do it; you can start your research here: File Upload plugin for Apache

Over Saturation of the VPN Connection

The VPN connection is there for many users; don’t think that your file is the most important thing in the world. If you take all of the bandwidth for your file transfer, other users might not be able to perform important daily tasks.

The over saturation of the VPN connection can be avoided by implementing bandwidth limiting at the software level.

You can do that with SMB by using robocopy, at the client level. The command will look like this:

robocopy X:\source-folder\ \\RemoteServer\RemoteFolder /mir /IPG:250

The IPG parameter is the one that controls the bandwidth. IPG stands for Inter-Packet Gap, a delay in milliseconds that robocopy inserts between packets. With an IPG of 250, the transfer rate on a 100 MBps network is around 12.7 MBps. The lower the IPG, the higher the bandwidth saturation. The transfer rate will be different for different network speeds.

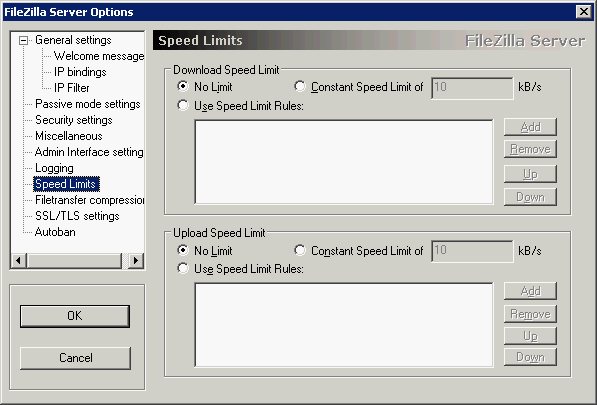

With FTP, the limit is set at the server level, and it’s very simple if you use the FileZilla FTP server. Just restrict the bandwidth to a safe limit (note that there is no restriction set in the picture).

Apache uses mod_ratelimit to control the bandwidth of its clients. For more information about that take a look at this page:

http://httpd.apache.org/docs/trunk/mod/mod_ratelimit.html

Rsync can also limit the bandwidth at the client level, with the “--bwlimit” option. A command to synchronize two folders with rsync, throttling the bandwidth, would look like this:

rsync -a --bwlimit=3000 /local/folder user@RemoteHost:/remote/backup/folder/

The 3000 means 3000 kilobytes per second; rsync reads the value in units of 1024 bytes unless a suffix is given. IMPORTANT, if you use

Control VPN Traffic

It looks like a simple decision to allow as much traffic as possible through the VPN, and most companies will decide that VPN traffic has the highest priority. However, in real life there are many non-VPN applications that run over the Internet and are critical for a business: booking a flight ticket, using a hosted web application, getting your emails from a hosted email server, and so on. So it might make sense to cap the bandwidth used by the VPN, and this is especially needed in environments where large file transfers over VPN are very common. The best way to control this is through firewall policies that limit the bandwidth for the VPN destination. On some devices, like the Fortigate firewalls I am using, this is called traffic shaping. On other devices it might be named differently.

File Integrity Verification

There is a mechanism for checking the file integrity with robocopy and rsync.

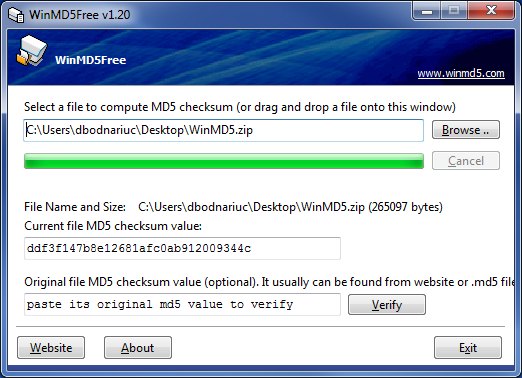

The perfect way to make sure your file is identical to the remote one is to compare MD5 checksums. On Linux, the md5sum tool comes installed by default in many distributions; on Windows you can use WinMD5, which you can download here: WinMD5
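As a rough sketch of the MD5 check on the Linux side, the helper below computes the digest of the local copy and compares it with a digest you obtained on the other end, for example by running md5sum there or by reading it from WinMD5. The function name `verify_md5` is hypothetical, and the sketch assumes the GNU `md5sum` and `awk` tools are available.

```shell
# verify_md5 FILE EXPECTED_DIGEST
# Hypothetical helper: succeeds (exit status 0) when the MD5 digest of FILE
# matches EXPECTED_DIGEST, fails with 1 on a mismatch and 2 if FILE is unreadable.
verify_md5() {
    local file=$1 expected=$2 actual
    [ -r "$file" ] || { echo "cannot read $file" >&2; return 2; }
    # Reading from stdin keeps the file name out of md5sum's output.
    actual=$(md5sum < "$file" | awk '{print $1}')
    # Normalize to lowercase, since some tools print the digest in uppercase hex.
    actual=$(printf '%s' "$actual" | tr 'A-F' 'a-f')
    expected=$(printf '%s' "$expected" | tr 'A-F' 'a-f')
    [ "$actual" = "$expected" ]
}
```

A call such as `verify_md5 /Home/Local-Directory/bigfile.iso "$remote_digest" && echo "file is intact"` would then confirm the copy, where `bigfile.iso` and `remote_digest` are placeholders for your own file and the digest reported by the remote side.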